📬 Weekly AI Catch-Up: This Week in AI & GenAI - Unlimited Context Windows and User-Friendly UX for Autonomous GenAI Agents

AI Advancements, Industry Updates, Latest Papers, and Food for Thought: Your Weekly Roundup.

Welcome to the latest roundup of groundbreaking developments in the world of artificial intelligence!

This week brought an array of innovations that promise to reshape how we interact with and harness the power of AI. From user-friendly runtimes for autonomous large language model (LLM) applications to major advances in handling effectively unlimited context, the AI landscape is evolving at an unprecedented pace. We also look at the emergence of AI-driven products in critical sectors such as the military, hinting at a future where AI's influence extends far beyond its current domains. Let's explore this week's highlights, from transformative technological breakthroughs to intriguing insights into the ethical and societal implications of AI.

🧠 GoEX: A User-Friendly Runtime for Autonomous LLM Applications.

How can we work effectively with large language models (LLMs) that act on their own? This paper proposes post-facto validation. Rather than reviewing every action an LLM suggests before it runs, humans check the outcome after execution. Safeguards such as an LLM-undo button and damage confinement (limits on how much harm an action can do) let LLMs interact with applications with less direct human oversight. http://www.arxiv.org/abs/2404.06921

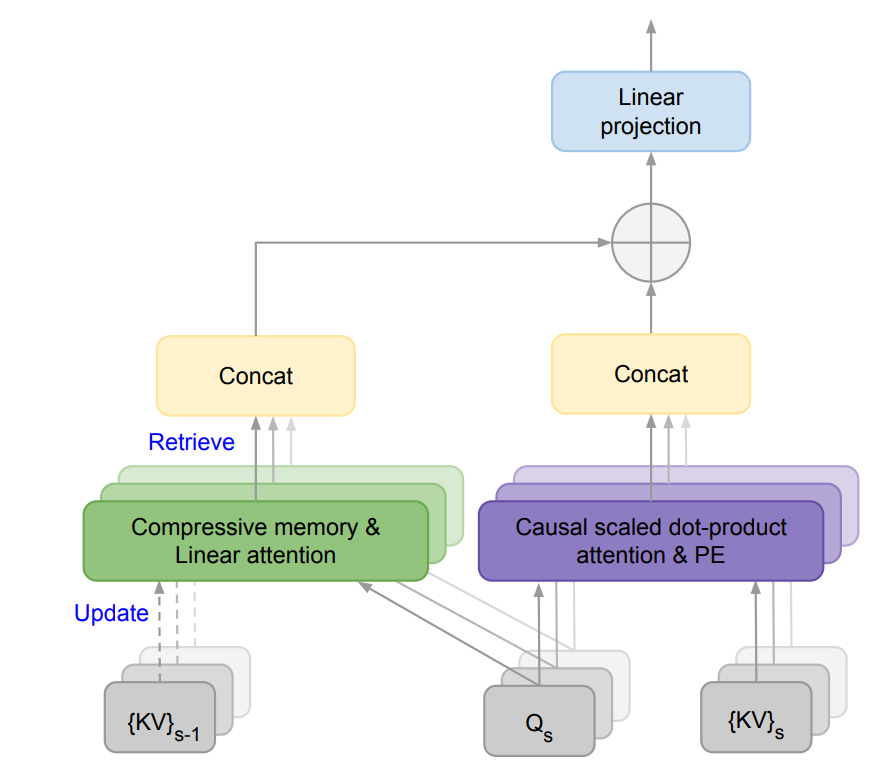
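The following minimal Python sketch illustrates the undo and damage-confinement ideas. Everything in it is hypothetical. `Action`, `PostFactoRuntime`, and the risk labels are illustrative names, not GoEX's actual API. The sketch makes two assumptions. First, every action ships with a compensating undo step. Second, damage is confined by refusing any action class outside an allow-list.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class Action:
    """An LLM-proposed action plus a compensating step that reverts it."""
    name: str
    do: Callable[[], None]
    undo: Callable[[], None]
    risk: str  # hypothetical risk label, e.g. "read", "write", "delete"


@dataclass
class PostFactoRuntime:
    """Runs actions first; a human reviews the result afterwards and may undo."""
    allowed_risks: Set[str] = field(default_factory=lambda: {"read", "write"})
    history: List[Action] = field(default_factory=list)

    def execute(self, action: Action) -> bool:
        # Damage confinement: refuse actions outside the permitted risk classes.
        if action.risk not in self.allowed_risks:
            return False
        action.do()
        self.history.append(action)
        return True

    def undo_last(self) -> None:
        # "LLM-undo": revert the most recently executed action, if any.
        if self.history:
            self.history.pop().undo()


# Usage: a toy calendar that an LLM agent edits.
calendar: List[str] = []
runtime = PostFactoRuntime()
ok = runtime.execute(Action("add meeting",
                            lambda: calendar.append("meeting"),
                            lambda: calendar.remove("meeting"),
                            "write"))
blocked = runtime.execute(Action("wipe calendar", calendar.clear, lambda: None, "delete"))
runtime.undo_last()  # the human reviews the outcome and rejects it
print(ok, blocked, calendar)  # → True False []
```

Pairing each action with an explicit inverse is the simplest way to make undo possible. Many real side effects, such as a sent email, have no clean inverse. For those, restricting what can run at all is the main line of defense.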

🔥🚀 Efficient Infinite Context Transformers with Infini-attention: Infini-attention, a new architecture from Google, tackles long-context processing, a key concern for enterprise LLM solutions. It adds a compressive memory to the attention mechanism. This lets a Transformer process inputs of effectively unbounded length with bounded memory and compute, and it improves results on long-context language tasks. Its plug-and-play adaptability to existing LLMs and its fast streaming inference could make it a game-changer. My post about it on LinkedIn
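The NumPy sketch below shows the idea of a compressive memory. It is a simplified, single-head reading of the Infini-attention description, and all names are hypothetical. Each segment does three things:

1. It reads long-term context from a fixed-size memory matrix, using the positive feature map ELU(x) + 1.
2. It runs ordinary causal attention locally within the segment.
3. It folds its own keys and values into the memory.

A learned gate then mixes the two outputs. Two simplifications apply. Projections are omitted, so `q`, `k`, and `v` are random inputs. Only the linear memory update is shown, not the paper's delta-rule variant.

```python
import numpy as np


def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1: a positive feature map, as used for linear-attention memory
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def infini_attention_segment(q, k, v, mem, z, beta):
    """One segment of simplified single-head Infini-attention.

    q, k: (L, d_k); v: (L, d_v); mem: (d_k, d_v) memory matrix; z: (d_k,) normalizer.
    Returns the segment output and the updated (mem, z).
    """
    sq, sk = elu_plus_one(q), elu_plus_one(k)
    # 1) Long-term context: read from the memory built from *previous* segments.
    a_mem = (sq @ mem) / (sq @ z + 1e-6)[:, None]
    # 2) Local context: causal softmax attention inside the current segment.
    seg_len, d_k = q.shape
    scores = q @ k.T / np.sqrt(d_k)
    causal = np.tril(np.ones((seg_len, seg_len), dtype=bool))
    a_dot = softmax(np.where(causal, scores, -np.inf)) @ v
    # 3) Fold this segment's keys/values into the fixed-size memory (linear update).
    mem = mem + sk.T @ v
    z = z + sk.sum(axis=0)
    # 4) A learned scalar gate mixes long-term and local context.
    g = 1.0 / (1.0 + np.exp(-beta))
    return g * a_mem + (1.0 - g) * a_dot, mem, z


rng = np.random.default_rng(0)
d_k, d_v, seg_len = 8, 8, 16
mem, z = np.zeros((d_k, d_v)), np.zeros(d_k)
for _ in range(10):  # 10 segments = 160 tokens, yet the memory stays (8, 8)
    q, k, v = (rng.standard_normal((seg_len, d)) for d in (d_k, d_k, d_v))
    out, mem, z = infini_attention_segment(q, k, v, mem, z, beta=0.0)
print(out.shape, mem.shape)  # → (16, 8) (8, 8)
```

The memory stays the same size however many segments are processed. This is what bounds memory and compute as the context grows.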

🔥📰 And, as a powerful complement, Meta's Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length.

How can we efficiently handle long sequences in LLM pretraining and inference? Megalodon is a new neural architecture designed to overcome the limitations of traditional Transformers, such as the quadratic cost of attention over long sequences. It adds components such as a complex exponential moving average (CEMA) and a normalized attention mechanism. With these, Megalodon is more efficient than Transformers at large scale and shows promising results in both pretraining efficiency and downstream task accuracy. [Paper] http://arxiv.org/abs/2404.08801
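The sketch below illustrates the CEMA idea as we understand it from the paper. It is a damped exponential moving average whose update is multiplied by a complex rotation cos θ + i sin θ. Only the real part of the projected hidden state is kept. Several simplifications are assumed:

- The input is a single real sequence.
- The expansion weights are folded into `alpha`.
- `eta` is real.
- It uses a plain Python loop. An efficient implementation would not compute the recurrence step by step like this.

All names and values are illustrative.

```python
import numpy as np


def cema(x, alpha, delta, theta, eta):
    """Simplified complex exponential moving average (CEMA) over a 1-D sequence.

    x: (T,) real inputs; alpha, delta, theta, eta: (n,) per-dimension parameters.
    """
    rot = np.cos(theta) + 1j * np.sin(theta)  # unit complex rotation e^{i*theta}
    h = np.zeros(alpha.shape, dtype=complex)  # n complex hidden EMA states
    ys = []
    for x_t in x:
        # damped EMA update, with both terms rotated in the complex plane
        h = alpha * rot * x_t + (1.0 - alpha * delta) * rot * h
        ys.append(np.real(eta @ h))  # project to a scalar and keep the real part
    return np.array(ys)


n = 4
alpha, delta = np.full(n, 0.5), np.ones(n)
theta = np.linspace(0.0, np.pi / 2, n)  # theta = 0 recovers a plain real EMA
eta = np.ones(n) / n
impulse = np.zeros(8)
impulse[0] = 1.0
print(np.round(cema(impulse, alpha, delta, theta, eta), 3))  # impulse response of the filter
```

Setting θ = 0 reduces the update to the ordinary damped EMA. The rotation adds oscillatory components to how past inputs fade out.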

AI x Robotics

🤖 Train your robots, don't program them. Example: learning agile soccer skills for a bipedal robot. Deep reinforcement learning taught the robot its soccer skills, and the trained agent performed significantly better than a manually programmed baseline, showing promise for complex robot interactions. http://science.org/doi/10.1126/scirobotics.adi8022
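To show what "train, don't program" means in miniature, here is a toy tabular Q-learning sketch. It is not the paper's method, which uses deep reinforcement learning at a vastly larger scale. The corridor, the "ball" position, and the reward values are all hypothetical. The agent is never told to walk right. It learns that behavior purely from the reward signal.

```python
import random

N_STATES = 6        # toy corridor: positions 0..5, the "ball" is at position 5
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    """Deterministic toy environment: move, clip to the corridor, reward reaching the ball."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    reward = 1.0 if done else -0.01  # small per-step cost encourages short paths
    return nxt, reward, done


def greedy(q_row):
    # index of the highest-valued action in this state
    return max(range(len(ACTIONS)), key=lambda i: q_row[i])


def train(episodes=500, alpha=0.5, gamma=0.9, eps=0.1, seed=0):
    """Tabular Q-learning: the behavior is learned from reward, not hand-coded."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # epsilon-greedy: mostly exploit, occasionally explore
            a = rng.randrange(len(ACTIONS)) if rng.random() < eps else greedy(q[s])
            s2, r, done = step(s, ACTIONS[a])
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q


q = train()
policy = [ACTIONS[greedy(q[s])] for s in range(N_STATES - 1)]
print(policy)  # → [1, 1, 1, 1, 1]
```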

🪜 Can we apply Reinforcement Learning to our daily products?

Body Design and Gait Generation of Chair-Type Robot.

Have you ever wondered how far AI and reinforcement learning can be integrated into our everyday products? The JSK Robotics Laboratory at the University of Tokyo explored just that, training their chairs in Omniverse / Isaac Sim to stand up. It still looks funny today, but it could be a preview of intelligent products to come. [Link] http://shin0805.github.io/chair-type-tripedal-robot/

News from Industry:

✈️ First successful AI dogfight by the US Air Force: DARPA conducted a successful dogfight test, pitting an AI-controlled aircraft against a human pilot. The AI system, part of DARPA's Air Combat Evolution program, autonomously flew the experimental X-62A aircraft in dogfights against a human-piloted F-16. Human pilots were on board with safety controls, but the AI did the flying and performed admirably, showcasing its potential in aerial combat.

[theverge.com/2024/4/18/24133870/us-air-force-ai-dogfight-test-x-62a]

🤖🔐 How dangerous are unrestricted open-weight models? Can open-weight models like Command R+ be used for unethical tasks? Using tools and a custom setup, the agent "Commander" digs up negative information, attempts blackmail, and even engages in harassment. As AI advances, it is crucial to understand and address the risks associated with such models. [lesswrong.com/posts/4vPZgvhmBkTikYikA/creating-unrestricted-ai-agents-with-command-r]

💡 Intel Builds World’s Largest Neuromorphic System to Enable More Sustainable AI: Hala Point, the industry’s first 1.15-billion-neuron neuromorphic system, builds a path toward more efficient and scalable AI. Neuromorphic computing is an approach inspired by neuroscience. It integrates memory and computing with highly granular parallelism to minimize data movement, which makes computation more efficient. [intel.com/content/www/us/en/newsroom/news/intel-builds-worlds-largest-neuromorphic-system.html]
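Neuromorphic hardware computes with spiking neurons. Each neuron keeps its own state right next to the computation and emits a signal only when it fires. The sketch below is a generic textbook leaky integrate-and-fire (LIF) neuron that illustrates this. It is not Intel's neuron model or programming interface, and the parameter values are arbitrary assumptions.

```python
def simulate_lif(input_current, tau=10.0, v_thresh=1.0, v_reset=0.0, dt=1.0):
    """Leaky integrate-and-fire neuron; returns the time steps at which it spikes.

    The membrane potential v is the neuron's local state ("memory next to compute").
    """
    v = v_reset
    spikes = []
    for t, i_t in enumerate(input_current):
        # the potential leaks toward rest and integrates the incoming current
        v += dt * (-v / tau + i_t)
        if v >= v_thresh:
            spikes.append(t)  # emit a spike event...
            v = v_reset       # ...and reset the potential
    return spikes


print(simulate_lif([0.2] * 20))  # → [6, 13]
print(simulate_lif([0.0] * 20))  # → []
```

The output is a sparse list of spike times rather than a dense stream of values. That sparsity is where event-driven hardware can save data movement and energy.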

🌆 Prompt-to-Urban-Design: Urban Architect, 3D Urban Scene Generation. Can text-to-3D generation tackle the complexity of urban scenes? Urban Architect generates large-scale urban scenes covering more than 1,000 m of driving distance.

https://urbanarchitect.github.io/

🎓 Why Law Students Should Use ChatGPT - ChatGPT in Law Education. [law.vanderbilt.edu/why-law-students-should-embrace-chatgpt/]

📚 MathGPT: Personalized Learning Platform. Leveraging Llama 2 for personalized learning. Open-source AI tools democratizing personalized education globally. [Blog Meta]

Interesting Food for Thought:

🤖 Claude 3 Opus, Anthropic's large language model, shows remarkable capabilities akin to a Turing machine! By learning from existing tapes and deducing the rules, it accurately computes new sequences, reportedly solving more than 500 24-step problems without an error. Notably, reaching 100% accuracy at 24 steps required input tapes containing 30,000 tokens. According to the post, GPT-4 cannot do this. [x.com]
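This sketch clarifies what "computing a tape for 24 steps" involves. A Turing machine applies a rule table one step at a time and rewrites its tape as it goes. The exact format used in the post is not known here. To succeed at such a task, the model has to infer a rule table like this from example traces, then reproduce new traces. The example rules are a hypothetical choice: the classic 2-state busy beaver.

```python
def run_tm(rules, tape, state="A", head=0, steps=24, blank="0"):
    """Apply a Turing machine rule table step by step and record the tape after each step.

    rules maps (state, symbol) -> (symbol_to_write, "L" or "R", next_state).
    The machine halts early when no rule matches.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    trace = []
    for _ in range(steps):
        symbol = cells.get(head, blank)
        if (state, symbol) not in rules:
            break  # no applicable rule: halt
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        lo, hi = min(cells), max(cells)
        trace.append("".join(cells.get(i, blank) for i in range(lo, hi + 1)))
    return trace


# Hypothetical example rules: the 2-state busy beaver ("H" = halt, no rules).
BUSY_BEAVER_2 = {
    ("A", "0"): ("1", "R", "B"),
    ("A", "1"): ("1", "L", "B"),
    ("B", "0"): ("1", "L", "A"),
    ("B", "1"): ("1", "R", "H"),
}

for line in run_tm(BUSY_BEAVER_2, "0"):
    print(line)
```

This machine halts after 6 steps with four 1s on the tape. Longer-running rule tables would fill all 24 steps.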

🥶 AI Winter Unlikely Due to Robotics. Robotics' potential to prevent AI winter. [x.com/DrJimFan/status/1781726400854269977].

Adding my personal opinion: we are only just getting started with multimodality and virtual environments, which could also lead to much better world models.

🧠 ChatGPT-4's Social Intelligence. ChatGPT-4 shows superior performance on a social intelligence test. [doi.org/10.3389/fpsyg.2024.1353022]

💰 Bet on AI Smarter Than Humans. A $10 million bet against AI surpassing human intelligence. [garymarcus.substack.com/p/10-million-says-we-wont-see-human]

💭 AI Consciousness: Theoretical Computer Science Perspective. Insights into AI consciousness from a theoretical standpoint. [arxiv.org/abs/2403.17101]

👨💻 Human vs. AI Online Reviews: Can you distinguish between AI-generated and human-written online reviews? Surprisingly, not really, if the system is good! Most people struggle, raising concerns about trust and transparency in online feedback systems. [Springer] http://link.springer.com/article/10.1007/s11002-024-09729-3

Papers for experts:

📰 Google's TransformerFAM: Indefinite Sequence Processing. Novel Transformer architecture fosters working memory. [arxiv.org/abs/2404.09173]

📰 RecurrentGemma: Efficient Open Language Models. Moving beyond Transformers for efficient LLMs. [arxiv.org/abs/2404.07839]

📰 AdapterSwap: Continuous Training of LLMs. Ensuring continuous LLM training with access control. [arxiv.org/abs/2404.08417]

📰 Many-Shot In-Context Learning: Can large language models (LLMs) move from few-shot to many-shot in-context learning (ICL)? This study suggests they can. Using expanded context windows, the researchers observed significant performance gains across a variety of tasks. However, the limited supply of human-written examples can be a bottleneck. To address this, they explore two alternatives. Reinforced ICL replaces human-written solutions with model-generated rationales. Unsupervised ICL prompts the model with problems alone. Surprisingly, both methods prove effective, particularly on complex reasoning tasks. Moreover, many-shot learning can overcome pretraining biases and learn high-dimensional functions. It also challenges traditional indicators of performance, such as next-token prediction loss.
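The three prompting setups can be sketched as plain prompt construction. This is our reading of the setup, not the authors' code. The prompt format, the function names, and the stand-in `toy_generate` / `toy_is_correct` helpers are all hypothetical. A real pipeline would call an actual model and an actual answer checker. Reinforced ICL is shown as keeping only model-written rationales whose final answer is correct.

```python
from typing import Callable, List, Sequence, Tuple

Shot = Tuple[str, str]  # (problem, solution)


def build_prompt(shots: Sequence[Shot], query: str) -> str:
    """Few- or many-shot prompt: solved examples followed by the new problem."""
    blocks = [f"Problem: {p}\nSolution: {s}" for p, s in shots]
    blocks.append(f"Problem: {query}\nSolution:")
    return "\n\n".join(blocks)


def reinforced_shots(problems: Sequence[str], answers: Sequence[str],
                     generate: Callable[[str], str],
                     is_correct: Callable[[str, str], bool], k: int) -> List[Shot]:
    """Reinforced ICL: use model-written rationales, keeping only those with a correct answer."""
    shots: List[Shot] = []
    for problem, answer in zip(problems, answers):
        rationale = generate(build_prompt([], problem))
        if is_correct(rationale, answer):
            shots.append((problem, rationale))
        if len(shots) == k:
            break
    return shots


def unsupervised_prompt(problems: Sequence[str], query: str) -> str:
    """Unsupervised ICL: show only unsolved problems, then ask for the query's solution."""
    listed = "\n\n".join(f"Problem: {p}" for p in problems)
    return f"{listed}\n\nProblem: {query}\nSolution:"


# Hypothetical stand-ins for a real model call and answer checker.
def toy_generate(prompt: str) -> str:
    return "Add the numbers. Answer: 4"


def toy_is_correct(rationale: str, answer: str) -> bool:
    return rationale.strip().endswith(f"Answer: {answer}")


shots = reinforced_shots(["2 + 2?", "3 + 3?"], ["4", "6"], toy_generate, toy_is_correct, k=500)
prompt = build_prompt(shots, "5 + 5?")
print(len(shots), prompt.count("Problem:"))  # → 1 2
```

In the many-shot regime, `k` grows into the hundreds or thousands. This is why long context windows are the enabling ingredient.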

[arxiv.org/abs/2404.11018]

📰 Less is more! Scaling (Down) CLIP: Analysis of Data, Architecture, and Training. A comprehensive analysis of CLIP scaling strategies shows that smaller datasets of high-quality data can outperform larger, lower-quality ones. It also offers guidance on selecting the appropriate model size and architecture based on dataset size and available compute. [Arxiv.org] http://arxiv.org/abs/2404.08197